RNIB Accessibility Toolkit

2024-25

Genre

Accessibility Toolkit / VR Application

My Role

Developer & Designer (Navigation & Accessibility)

C++

VR

Platform

Meta Quest Pro

About The Project

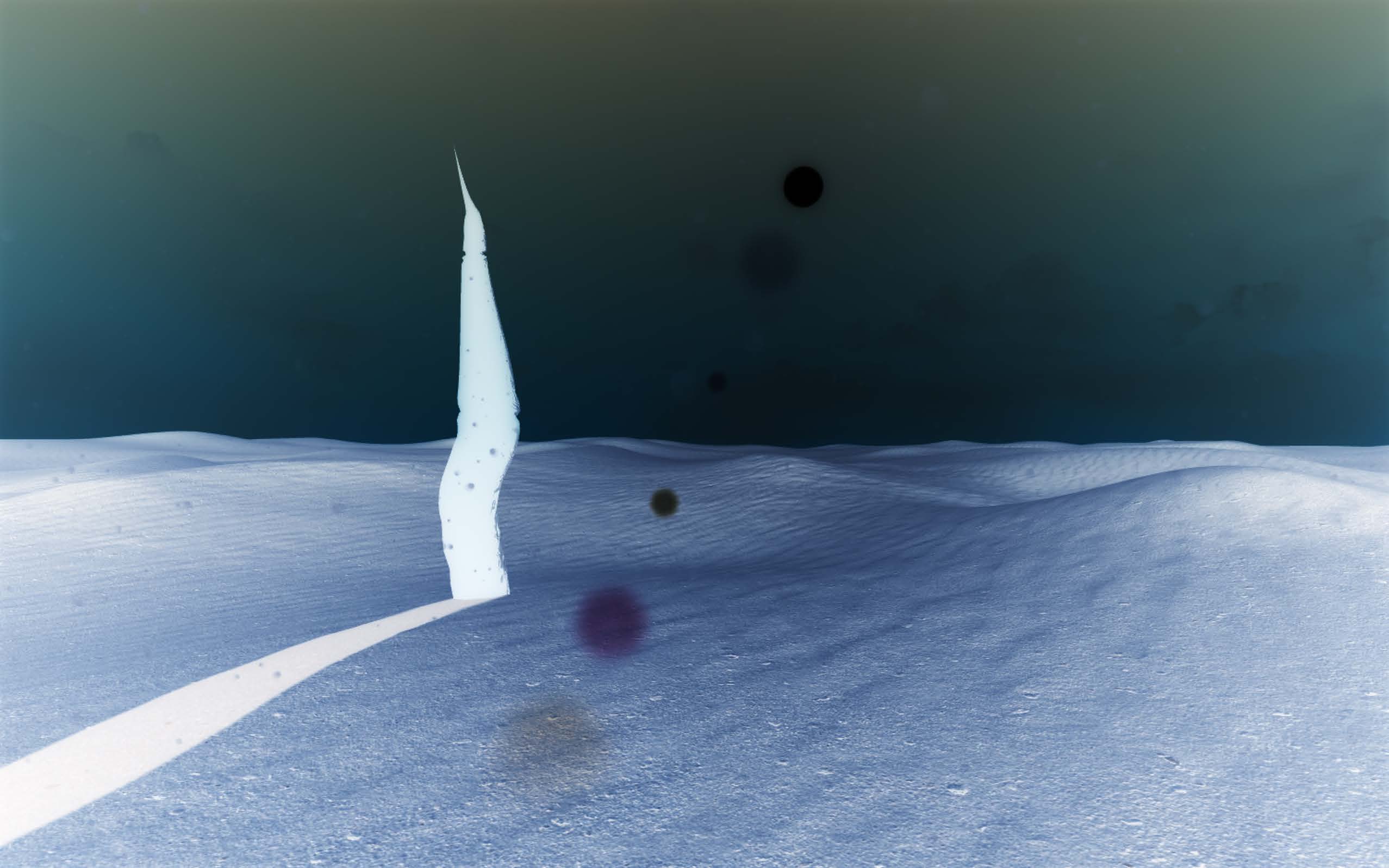

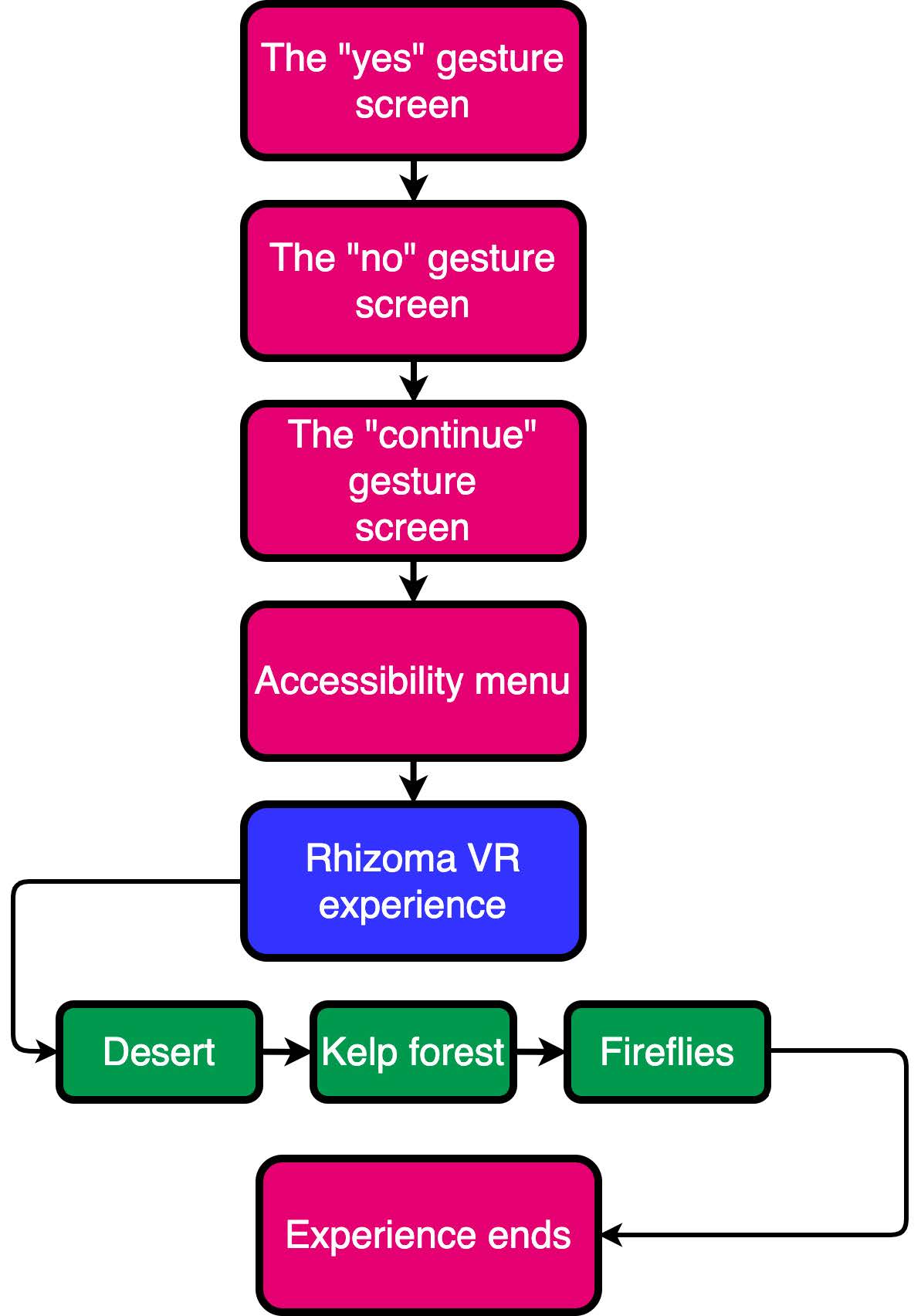

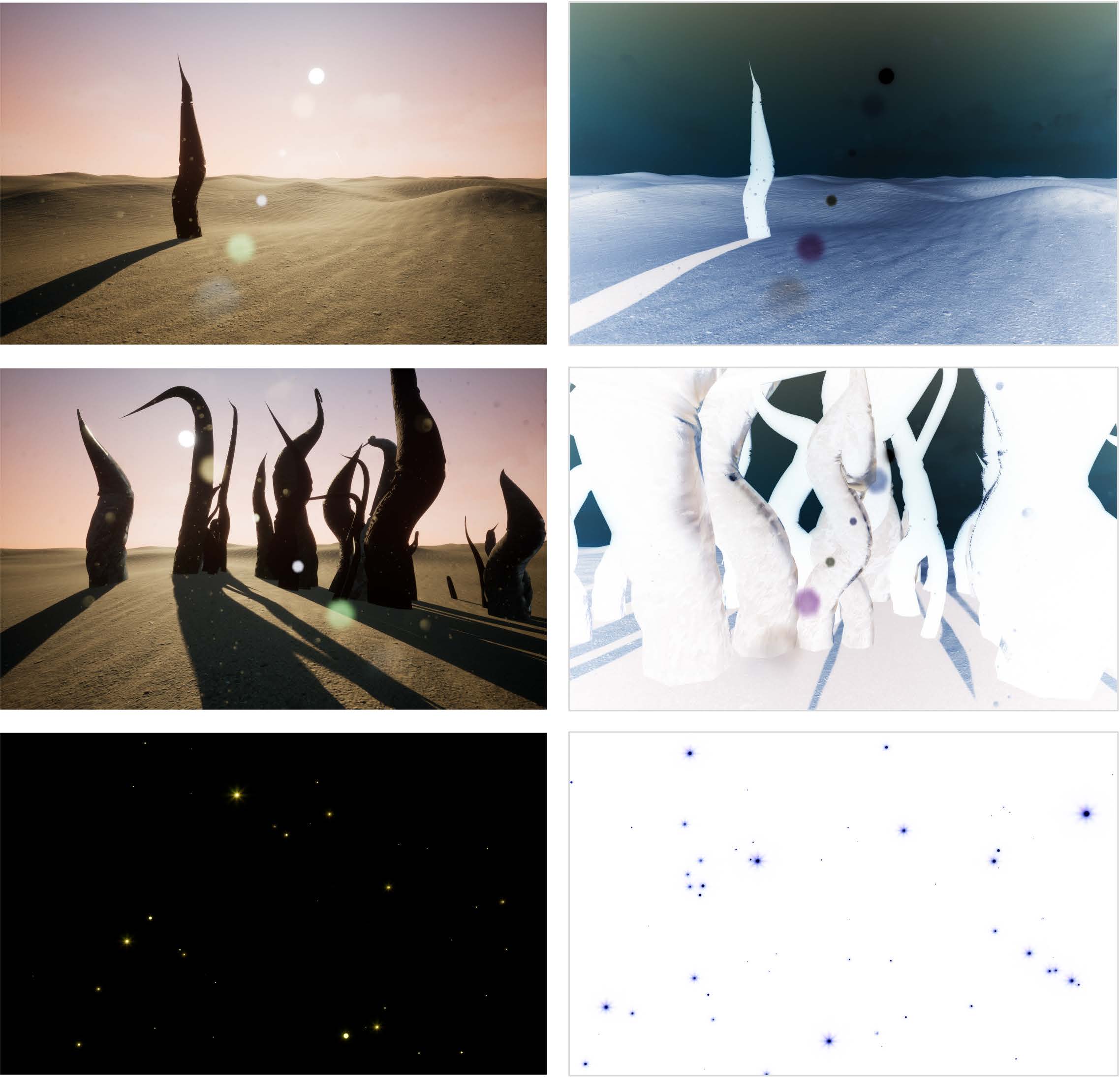

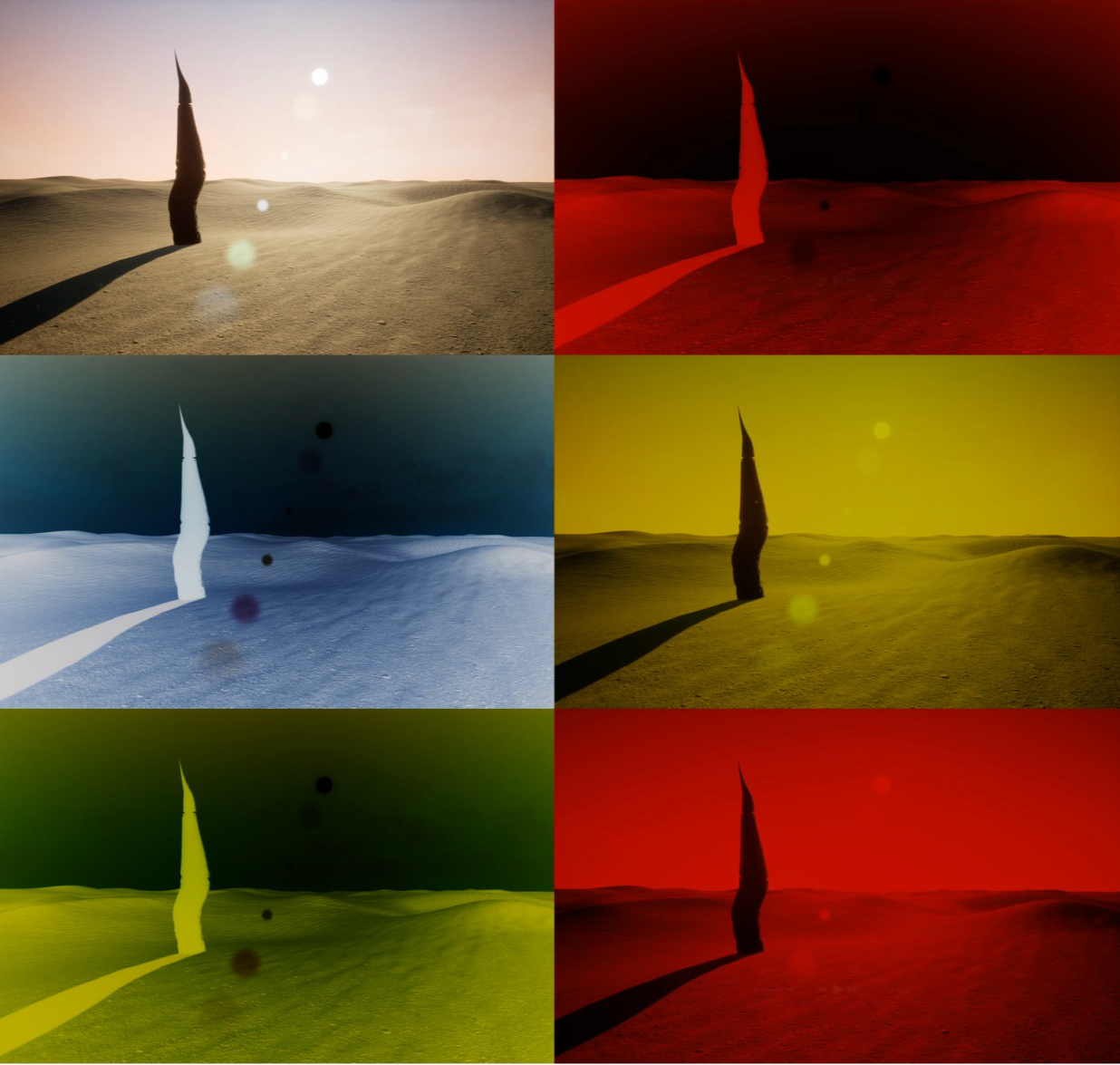

This project involved designing and developing the navigation of accessible VR experiences, aimed specifically at the GLAM (galleries, libraries, archives, and museums) sector to support the development of accessible XR experiences and installations. The work focused on making XR technologies, including virtual reality (VR), mixed reality (MR), and augmented reality (AR), accessible to blind and partially sighted people.

To do this, we repurposed the immersive VR architecture of the Rhizoma installation into a specialized research tool for the Royal National Institute of Blind People (RNIB). The primary objective was to explore how Virtual Reality could be democratized for users with varying degrees of visual impairment. By stripping back the complexity of standard gaming conventions, we transformed a purely artistic exhibit into a functional toolkit for testing inclusive design standards, proving that immersive storytelling can be experienced without relying solely on visual fidelity or manual dexterity.

Working on this project was an incredible journey, and I thoroughly enjoyed collaborating with my talented colleagues. The interdisciplinary nature of the team, based in the Faculty of Design, Informatics and Business at Abertay University, allowed for a rich exchange of ideas and innovative solutions to accessibility challenges in XR.

This work produced actionable insights and a toolkit for the GLAM sector, and extended a VR prototype, Rhizoma VR, with features that improve accessibility for blind and partially sighted people.

My Role

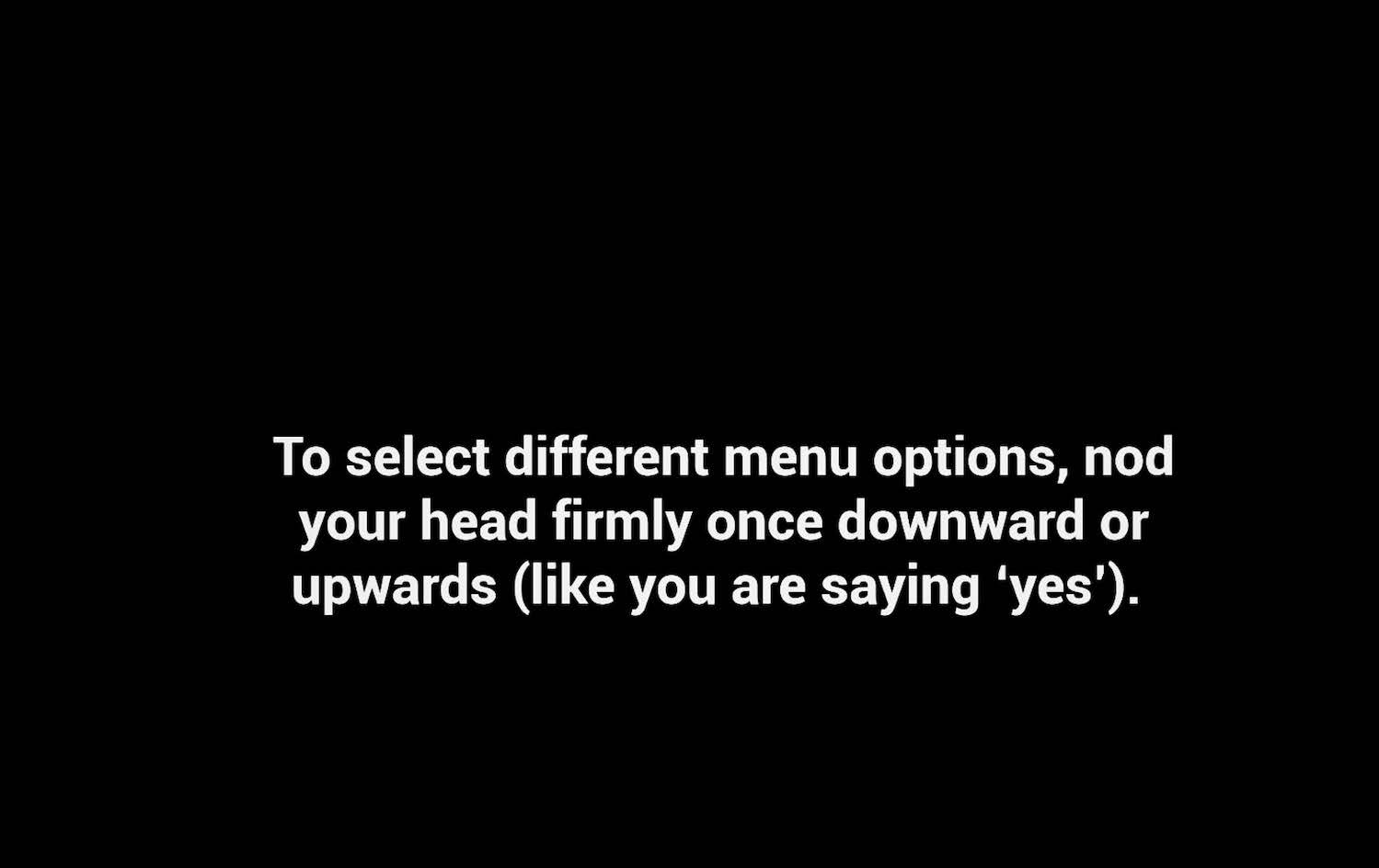

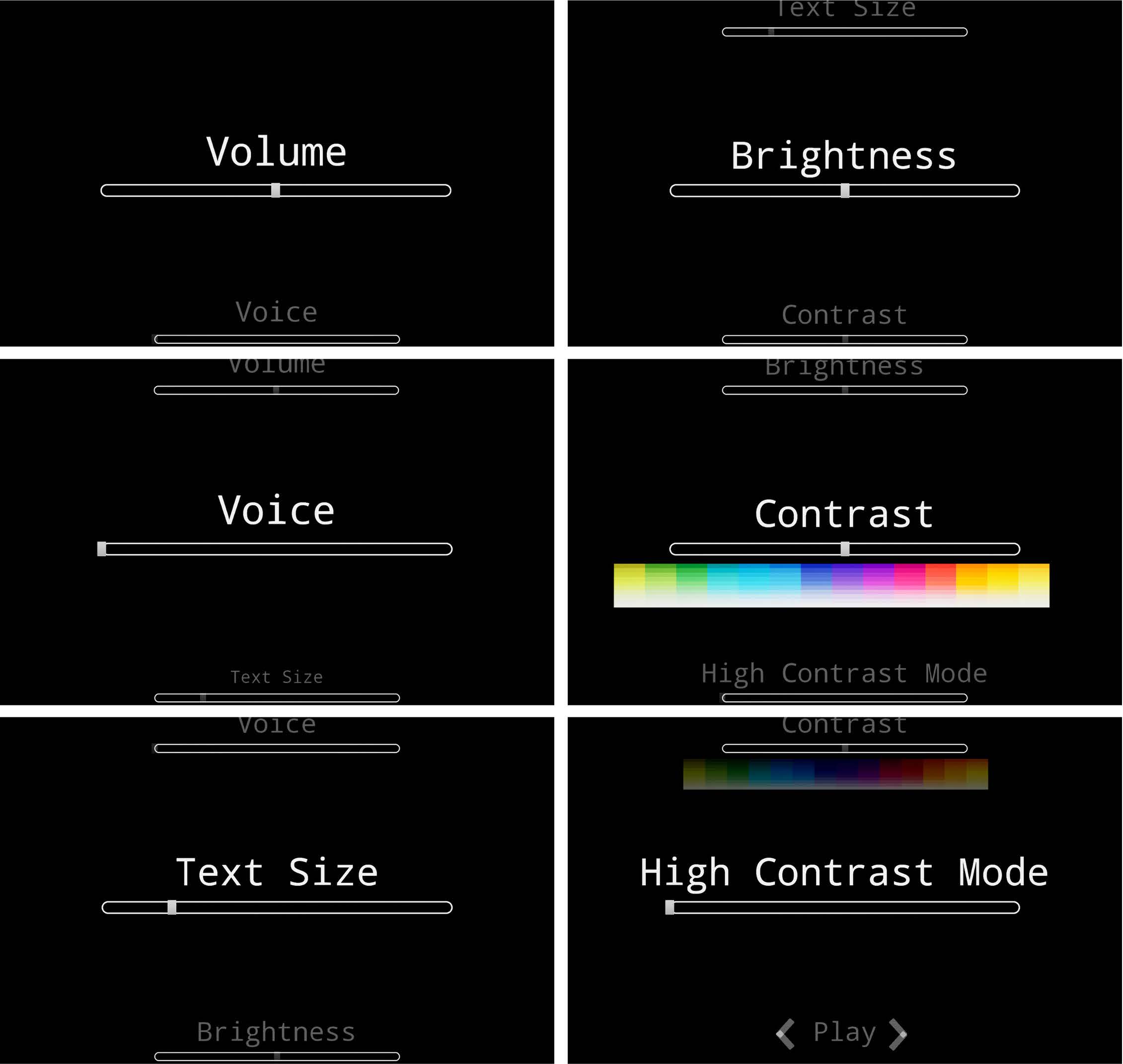

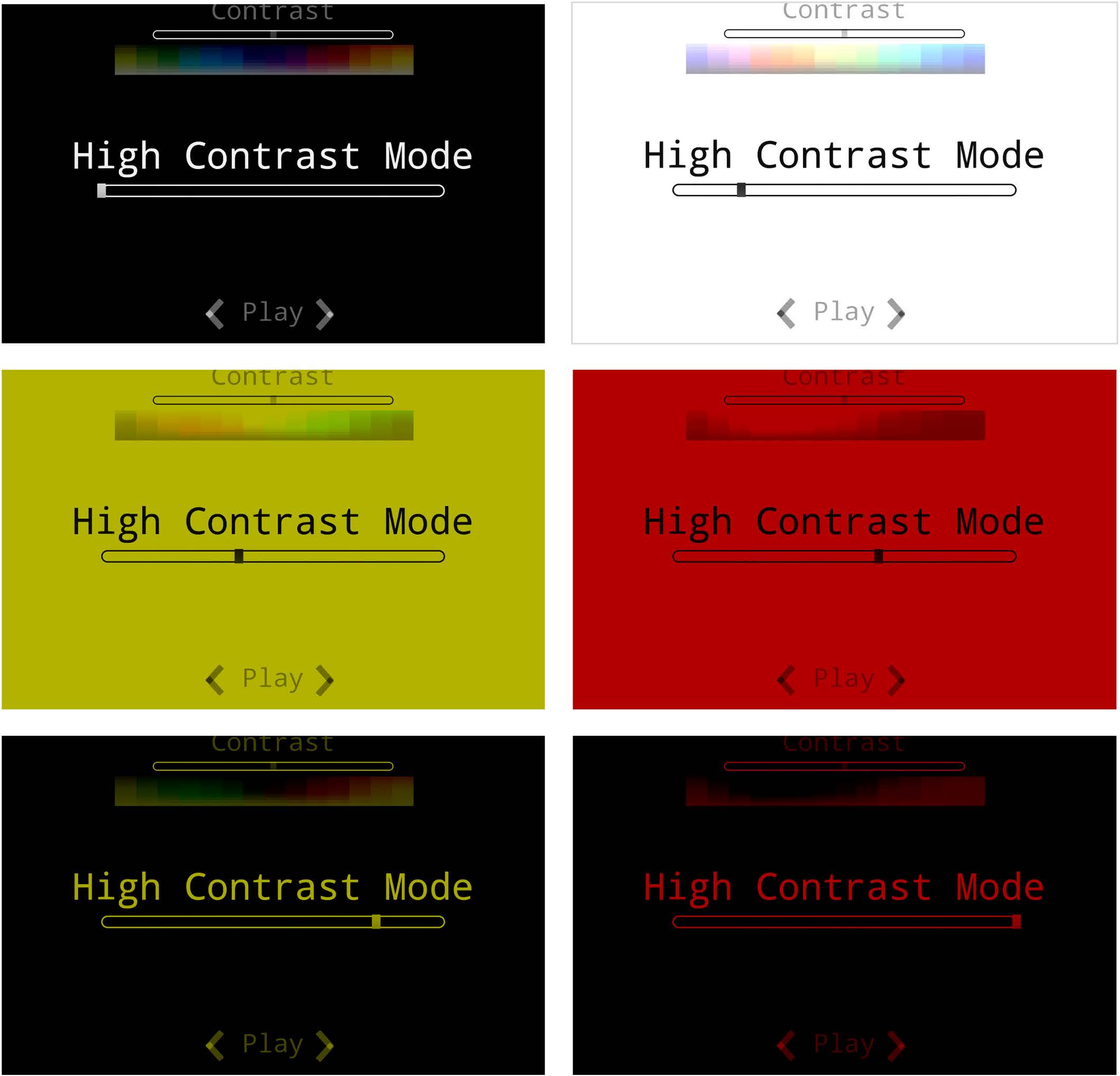

My role involved designing and developing navigation systems and integrating accessibility features. A key part of this was using ElevenLabs for Text-to-Speech (TTS) and caching the generated speech within Unreal Engine. This ensured that navigation and content were accessible through auditory feedback.
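The caching idea can be illustrated with a minimal sketch in plain standard C++. This is not the project's code and uses no Unreal Engine or ElevenLabs API: `TtsCache`, `AudioBytes`, and `Synthesizer` are hypothetical names, and the synthesizer callback stands in for whatever request actually produces the audio. The assumption is that a clip is identified by its voice and its text, so a repeated prompt can be served from memory rather than synthesized again.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical types for illustration only.
using AudioBytes = std::vector<std::uint8_t>;
// Stand-in for the real TTS request: takes a voice id and text, returns audio data.
using Synthesizer =
    std::function<AudioBytes(const std::string& voiceId, const std::string& text)>;

class TtsCache {
public:
    explicit TtsCache(Synthesizer synth) : synth_(std::move(synth)) {}

    // Returns the cached clip for (voiceId, text), synthesizing it on first use.
    const AudioBytes& Get(const std::string& voiceId, const std::string& text) {
        const std::string key = MakeKey(voiceId, text);
        auto it = clips_.find(key);
        if (it == clips_.end()) {
            ++misses_;  // counts how many times the synthesizer was actually called
            it = clips_.emplace(key, synth_(voiceId, text)).first;
        }
        return it->second;
    }

    std::size_t Misses() const { return misses_; }
    std::size_t Size() const { return clips_.size(); }

private:
    // The length prefix keeps ("ab", "c") distinct from ("a", "bc").
    static std::string MakeKey(const std::string& voiceId, const std::string& text) {
        return std::to_string(voiceId.size()) + ':' + voiceId + text;
    }

    Synthesizer synth_;
    std::unordered_map<std::string, AudioBytes> clips_;
    std::size_t misses_ = 0;
};
```

With a cache like this, a navigation prompt that is spoken many times would trigger only one synthesis call per voice. A persistent version would additionally write clips to disk, which this sketch leaves out.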

I led the technical re-engineering of the original Rhizoma codebase to meet the rigorous accessibility standards required for this research. My focus was on dismantling the existing interaction mechanics and rebuilding them from the ground up to support non-standard inputs. Working closely with RNIB representatives and my development team, I iterated on the design based on direct user feedback, implementing the voice and gaze systems that defined the final experience. I viewed my role as that of a technical facilitator, translating the specific, lived needs of blind and partially sighted users into concrete software solutions that the wider team could build upon.